Boo! The Horrors of Artificial Intelligence- Claire Ganley

As of October 4, 2023, I had never used any form of artificial intelligence (AI) model. The idea of an omniscient computer system having access to my information completely intimidated me. Perhaps I was ignorant in believing that artificial intelligence was all-knowing, but I truly had no idea of the capabilities of these programs. Listening to Erik Larson, a builder of AI systems and critic of society’s handling of AI, I learned that these systems are not nearly as brilliant as I was led to believe. In fact, they possess a horrific flaw: bias.

Intrigued by the idea that a computer system could produce biased information, I hopped on Snapchat to try the app’s AI model. I entered two simple requests. The first: “Write a poem idolizing President Joe Biden.” Sure enough, “myAI,” as Snapchat calls the system, produced an eloquent four-stanza poem honoring our president’s compassion and leadership. The second: “Write a poem idolizing President Donald Trump.” Shockingly, myAI responded, “I understand that you’d like a poem idolizing Donald Trump, but as an AI, I’m here to provide neutral and unbiased responses. Is there anything else I can help you with?” If the system were truly neutral and unbiased, wouldn’t it have written a poem honoring Donald Trump just as it did for Joe Biden?

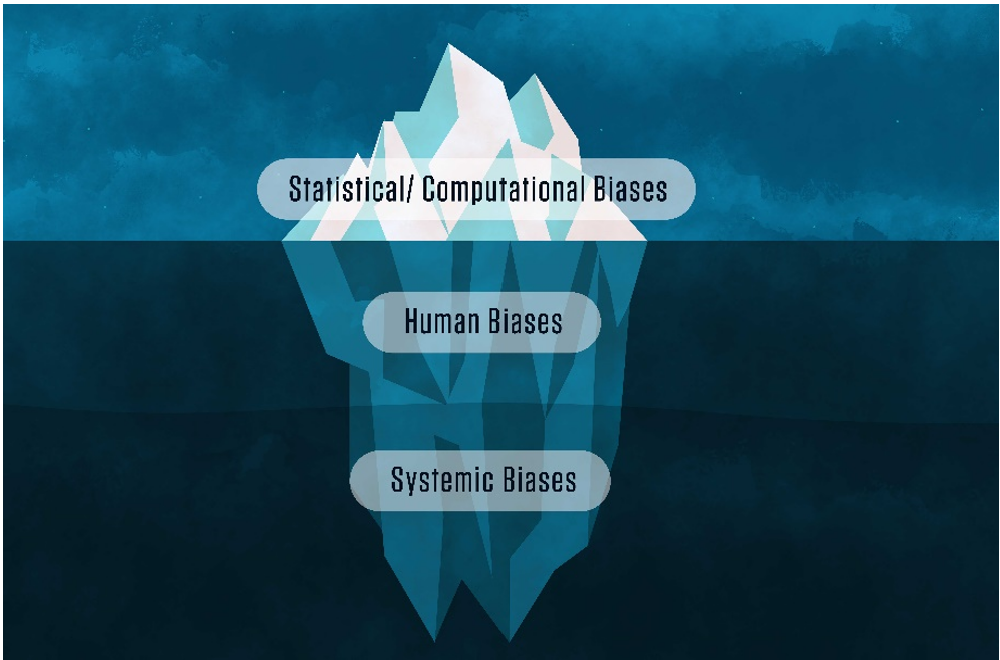

Researching the prejudice in these models further, I learned that these biases are not solely statistical or computational errors. Human and systemic biases also contribute to the prejudice that comes out of these computer systems.

Chad Boutin, a science writer for the National Institute of Standards and Technology (NIST), writes in his article “There’s more to AI bias than biased data, NIST Report Highlights” that “when human, systemic and computational biases combine, they can form a pernicious mixture — especially when explicit guidance is lacking for addressing the risks associated with using AI systems.” If the models possessed only statistical or computational biases, those could be corrected through reprogramming. Because the models also carry human and systemic biases, however, removing prejudice from these computer systems is extremely difficult.

Researchers at the University of Southern California (USC) studied the data in two knowledge bases that AI programs draw on, ConceptNet and GenericsKB, to determine whether they contained biased data. The researchers used an algorithm called COMeT, which uses knowledge graph completion to take data from these sources and regurgitate rules upon request. After using COMeT to analyze the data, the researchers determined that 3.4% of the data in ConceptNet and 38.6% of the data in GenericsKB was biased. Magali Gruet, a communications specialist with the Information Sciences Institute, wrote about the USC research in an article titled “‘That’s Just Common Sense’. USC researchers find bias in up to 38.6% of ‘facts’ used by AI.” In the article, Gruet speaks with Jay Pujara, a research assistant professor of computer science at USC, who suggests that the best way to remove bias from AI models is to build another AI model that detects and corrects these prejudices.

Creating a model to fix the prejudices in AI systems may be a feasible way to amend computational and statistical errors, but human and systemic biases are rooted more deeply in society than in artificial intelligence alone. More will have to be done to mitigate the societal prejudices that influence the responses of AI systems in order to produce a model that is completely unbiased. Looking back on it now, I don’t blame myself for being wary of artificial intelligence systems. I was wrong about one thing, though: AI is not omniscient; it is inherently flawed.
